A tweet I posted a few days ago generated quite a bit of interest from people running or managing their own services, and I thought I would share some of the cool things we are working on.

1Password servers will be down for the next few hours. We are recreating our entire environment to replace AWS CloudFormation with @HashiCorp Terraform. It is like creating a brand new universe, from scratch. - @roustem

This post will go into technical details and I apologize in advance if I explain things too quickly. I tried to make up for this by including some pretty pictures but most of them ended up being code snippets. 😊

1Password and AWS

1Password is hosted by Amazon Web Services (AWS). We’ve been using AWS for several years now, and it is incredible how easy it was to scale our service from zero users three years ago to several million happy customers today.

AWS has many geographical regions. Each region consists of multiple independent data centres located closely together. We are currently using three regions:

- N. Virginia, USA (us-east-1)

- Montreal, Canada (ca-central-1)

- Frankfurt, Germany (eu-central-1)

In each region we have four environments running 1Password:

- production

- staging

- testing

- development

If you are counting, that’s 12 environments across three regions, including three production environments: 1password.com, 1password.ca, and 1password.eu.

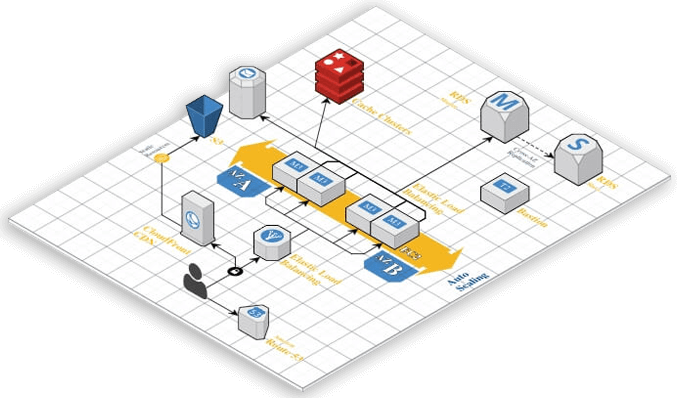

Every 1Password environment is more or less identical and includes these components:

- Virtual Private Cloud

- Amazon Aurora database cluster

- Caching (Redis) clusters

- Subnets

- Routing tables

- Security roles

- IAM permissions

- Auto-scaling groups

- Elastic Compute Cloud (EC2) instances

- Elastic Load Balancers (ELB)

- Route53 DNS (both internal and external)

- Amazon S3 buckets

- CloudFront distributions

- Key Management System (KMS)

Here is a simplified diagram:

As you can see, there are many components working together to provide the 1Password service. One of the reasons it is so complex is the need for high availability. Most of the components are deployed as a cluster to make sure there are at least two of each: database, cache, server instance, and so on.

Furthermore, every AWS region has at least two data centres that are also known as Availability Zones (AZs) – you can see them in blue in the diagram above. Every AZ has its own independent power and network connections. For example, the Canadian region ca-central-1 has two data centres: ca-central-1a and ca-central-1b.

If we deployed all 1Password components into just a single Availability Zone, then we would not be able to achieve high availability because a single problem in the data centre would take 1Password offline. This is why when 1Password services are deployed in a region, we make sure that every component has at least one backup in the neighbouring data centre. This helps to keep 1Password running even when there’s a problem in one of the data centres.

Infrastructure as Code

It would be very challenging and error-prone to manually deploy and maintain 12 environments, especially when you consider that each environment consists of at least 50 individual components.

This is why so many companies have stopped updating their infrastructure manually and embraced Infrastructure as Code. With Infrastructure as Code, the hardware becomes software and can take advantage of all software development best practices. When we apply these practices to infrastructure, every server, every database, and every open network port can be written in code, committed to GitHub, peer-reviewed, and then deployed and updated as many times as necessary.
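To make "every open network port can be written in code" concrete, here is a minimal sketch of a security group that opens a single port. The resource name, group name, and `vpc_id` variable are hypothetical and not taken from the 1Password configuration; the syntax follows the pre-0.12 Terraform style used elsewhere in this post.

```hcl
# Hypothetical input: the VPC that the security group belongs to.
variable "vpc_id" {}

# Illustrative only: a security group that allows inbound HTTPS.
resource "aws_security_group" "example_web" {
  name   = "example-web"
  vpc_id = "${var.vpc_id}"

  # The open port is now a reviewable line of code.
  ingress {
    from_port   = 443
    to_port     = 443
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }
}
```

Opening another port, or closing this one, becomes a small diff that can go through the same pull request review as any other code change.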

AWS customers have two major options for describing and maintaining their infrastructure as code: AWS CloudFormation and HashiCorp Terraform.

CloudFormation is an excellent option for many AWS customers, and we successfully used it to deploy 1Password environments for over two years. At the same time we wanted to move to Terraform as our main infrastructure tool for several reasons:

- Terraform has a more straightforward and powerful language (HCL) that makes it easier to write and review code.

- Terraform has the concept of resource providers that allows us to manage resources outside of Amazon Web Services, including services like DataDog and PagerDuty, which we rely on internally.

- Terraform is completely open source and that makes it easier to understand and troubleshoot.

- We are already using Terraform for smaller web apps at AgileBits, and it makes sense to standardize on a single tool.
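As a rough illustration of the resource provider concept mentioned above, a single Terraform configuration can declare several providers side by side. The variable names below are assumptions made for this sketch, and the region is just one of the three listed earlier; this is not the actual 1Password setup.

```hcl
# Hypothetical variables; real credentials would normally be supplied
# at run time rather than committed to the repository.
variable "datadog_api_key" {}
variable "datadog_app_key" {}
variable "pagerduty_token" {}

# AWS resources (VPCs, subnets, instances, and so on).
provider "aws" {
  region = "ca-central-1"
}

# Monitoring resources managed in the same codebase.
provider "datadog" {
  api_key = "${var.datadog_api_key}"
  app_key = "${var.datadog_app_key}"
}

# On-call and alerting resources.
provider "pagerduty" {
  token = "${var.pagerduty_token}"
}
```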

Compared to the JSON or YAML files used by CloudFormation, Terraform HCL is both a more powerful and a more readable language. Here is a small example of a snippet that defines a subnet for the application servers. As you can see, the Terraform code is a quarter of the size, more readable, and easier to understand.

CloudFormation

    "B5AppSubnet1": {
      "Type": "AWS::EC2::Subnet",
      "Properties": {
        "CidrBlock": { "Fn::Select" : ["0", { "Fn::FindInMap" : [ "SubnetCidr", { "Ref" : "Env" }, "b5app"] }] },
        "AvailabilityZone": { "Fn::Select" : [ "0", { "Fn::GetAZs" : "" } ]},
        "VpcId": { "Ref": "Vpc" },
        "Tags": [
          { "Key" : "Application", "Value" : "B5" },
          { "Key" : "env", "Value": { "Ref" : "Env" } },
          { "Key" : "Name", "Value": { "Fn::Join" : ["-", [ {"Ref" : "Env"}, "b5", "b5app-subnet1"]] } }
        ]
      }
    },
    "B5AppSubnet2": {
      "Type": "AWS::EC2::Subnet",
      "Properties": {
        "CidrBlock": { "Fn::Select" : ["1", { "Fn::FindInMap" : [ "SubnetCidr", { "Ref" : "Env" }, "b5app"] }] },
        "AvailabilityZone": { "Fn::Select" : [ "1", { "Fn::GetAZs" : "" } ]},
        "VpcId": { "Ref": "Vpc" },
        "Tags": [
          { "Key" : "Application", "Value" : "B5" },
          { "Key" : "env", "Value": { "Ref" : "Env" } },
          { "Key" : "Name", "Value": { "Fn::Join" : ["-", [ {"Ref" : "Env"}, "b5", "b5app-subnet2"]] } }
        ]
      }
    },
    "B5AppSubnet3": {
      "Type": "AWS::EC2::Subnet",
      "Properties": {
        "CidrBlock": { "Fn::Select" : ["2", { "Fn::FindInMap" : [ "SubnetCidr", { "Ref" : "Env" }, "b5app"] }] },
        "AvailabilityZone": { "Fn::Select" : [ "2", { "Fn::GetAZs" : "" } ]},
        "VpcId": { "Ref": "Vpc" },
        "Tags": [
          { "Key" : "Application", "Value" : "B5" },
          { "Key" : "env", "Value": { "Ref" : "Env" } },
          { "Key" : "Name", "Value": { "Fn::Join" : ["-", [ {"Ref" : "Env"}, "b5", "b5app-subnet3"]] } }
        ]
      }
    },

Terraform

    resource "aws_subnet" "b5app" {
      count             = "${length(var.subnet_cidr["b5app"])}"
      vpc_id            = "${aws_vpc.b5.id}"
      cidr_block        = "${element(var.subnet_cidr["b5app"],count.index)}"
      availability_zone = "${var.az[count.index]}"

      tags {
        Application = "B5"
        env         = "${var.env}"
        type        = "${var.type}"
        Name        = "${var.env}-b5-b5app-subnet-${count.index}"
      }
    }
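The Terraform snippet relies on a VPC and on variables that are defined elsewhere. A minimal sketch of what those definitions might look like follows. Every value here (CIDR ranges, environment name, the choice of two Availability Zones) is an assumption for illustration, not the real 1Password configuration, and the syntax is the pre-0.12 style used in this post.

```hcl
# Hypothetical values throughout; only the names come from the snippet above.
variable "env" {
  default = "dev"
}

variable "type" {
  default = "development"
}

# One Availability Zone per subnet, indexed by count.index.
variable "az" {
  type    = "list"
  default = ["ca-central-1a", "ca-central-1b"]
}

# One list of CIDR blocks per subnet group; the list length drives "count".
variable "subnet_cidr" {
  type = "map"

  default = {
    b5app = ["10.0.1.0/24", "10.0.2.0/24"]
  }
}

resource "aws_vpc" "b5" {
  cidr_block = "10.0.0.0/16"
}
```

With definitions like these, adding a third subnet would mean appending one CIDR block and one Availability Zone to the lists, rather than copying another resource block as in the CloudFormation version.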

Terraform has another gem of a feature that we rely on: terraform plan. It allows us to visualize the changes that will happen to the environment without performing them.

For example, here is what would happen if we change the server instance size from t2.medium to t2.large.

Terraform Plan Output

    #
    # Terraform code changes
    #
    # variable "instance_type" {
    #   type = "string"
    # - default = "t2.medium"
    # + default = "t2.large"
    # }

    $ terraform plan
    Refreshing Terraform state in-memory prior to plan...
    ...

    An execution plan has been generated and is shown below.
    Resource actions are indicated with the following symbols:
    -/+ destroy and then create replacement

    Terraform will perform the following actions:

    -/+ module.b5site.aws_autoscaling_group.asg (new resource required)
          id: "B5Site-prd-lc20180123194347404900000001-asg" => <computed> (forces new resource)
          arn: "arn:aws:autoscaling:us-east-1:921352000000:autoScalingGroup:32b38032-56c6-40bf-8c57-409e9e4a264a:autoScalingGroupName/B5Site-prd-lc20180123194347404900000001-asg" => <computed>
          default_cooldown: "300" => <computed>
          desired_capacity: "2" => "2"
          force_delete: "false" => "false"
          health_check_grace_period: "300" => "300"
          health_check_type: "ELB" => "ELB"
          launch_configuration: "B5Site-prd-lc20180123194347404900000001" => "${aws_launch_configuration.lc.name}"
          load_balancers.#: "0" => <computed>
          max_size: "3" => "3"
          metrics_granularity: "1Minute" => "1Minute"
          min_size: "2" => "2"
          name: "B5Site-prd-lc20180123194347404900000001-asg" => "${aws_launch_configuration.lc.name}-asg" (forces new resource)
          protect_from_scale_in: "false" => "false"
          tag.#: "4" => "4"
          tag.1402295282.key: "Application" => "Application"
          tag.1402295282.propagate_at_launch: "true" => "true"
          tag.1402295282.value: "B5Site" => "B5Site"
          tag.1776938011.key: "env" => "env"
          tag.1776938011.propagate_at_launch: "true" => "true"
          tag.1776938011.value: "prd" => "prd"
          tag.3218409424.key: "type" => "type"
          tag.3218409424.propagate_at_launch: "true" => "true"
          tag.3218409424.value: "production" => "production"
          tag.4034324257.key: "Name" => "Name"
          tag.4034324257.propagate_at_launch: "true" => "true"
          tag.4034324257.value: "prd-B5Site" => "prd-B5Site"
          target_group_arns.#: "2" => "2"
          target_group_arns.2352758522: "arn:aws:elasticloadbalancing:us-east-1:921352000000:targetgroup/prd-B5Site-8080-tg/33ceeac3a6f8b53e" => "arn:aws:elasticloadbalancing:us-east-1:921352000000:targetgroup/prd-B5Site-8080-tg/33ceeac3a6f8b53e"
          target_group_arns.3576894107: "arn:aws:elasticloadbalancing:us-east-1:921352000000:targetgroup/prd-B5Site-80-tg/457e9651ad8f1af4" => "arn:aws:elasticloadbalancing:us-east-1:921352000000:targetgroup/prd-B5Site-80-tg/457e9651ad8f1af4"
          vpc_zone_identifier.#: "2" => "2"
          vpc_zone_identifier.2325591805: "subnet-d87c3dbc" => "subnet-d87c3dbc"
          vpc_zone_identifier.3439339683: "subnet-bfe16590" => "subnet-bfe16590"
          wait_for_capacity_timeout: "10m" => "10m"

    -/+ module.b5site.aws_launch_configuration.lc (new resource required)
          id: "B5Site-prd-lc20180123194347404900000001" => <computed> (forces new resource)
          associate_public_ip_address: "false" => "false"
          ebs_block_device.#: "0" => <computed>
          ebs_optimized: "false" => <computed>
          enable_monitoring: "true" => "true"
          iam_instance_profile: "prd-B5Site-instance-profile" => "prd-B5Site-instance-profile"
          image_id: "ami-263d0b5c" => "ami-263d0b5c"
          instance_type: "t2.medium" => "t2.large" (forces new resource)
          key_name: "" => <computed>
          name: "B5Site-prd-lc20180123194347404900000001" => <computed>
          name_prefix: "B5Site-prd-lc" => "B5Site-prd-lc"
          root_block_device.#: "0" => <computed>
          security_groups.#: "1" => "1"
          security_groups.4230886263: "sg-aca045d8" => "sg-aca045d8"
          user_data: "ff8281e17b9f63774c952f0cde4e77bdba35426d" => "ff8281e17b9f63774c952f0cde4e77bdba35426d"

    Plan: 2 to add, 0 to change, 2 to destroy.
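The plan shows why a one-line change replaces two resources: the Auto Scaling group takes its name from the launch configuration, so a new launch configuration forces a new group. The sketch below is a guess at the kind of module code that could produce such a plan. It is reconstructed from the attribute values in the output, not copied from the real module, and the `image_id` and `subnet_ids` variables are hypothetical.

```hcl
variable "instance_type" {
  type    = "string"
  default = "t2.large"
}

# Hypothetical inputs, not shown in the original post.
variable "image_id" {}

variable "subnet_ids" {
  type = "list"
}

# Changing instance_type forces a new launch configuration,
# and name_prefix gives each replacement a fresh generated name.
resource "aws_launch_configuration" "lc" {
  name_prefix   = "B5Site-prd-lc"
  image_id      = "${var.image_id}"
  instance_type = "${var.instance_type}"
}

# The group name embeds the launch configuration name, so a new
# launch configuration forces a new Auto Scaling group as well.
resource "aws_autoscaling_group" "asg" {
  name                 = "${aws_launch_configuration.lc.name}-asg"
  launch_configuration = "${aws_launch_configuration.lc.name}"
  min_size             = 2
  max_size             = 3
  desired_capacity     = 2
  health_check_type    = "ELB"
  vpc_zone_identifier  = "${var.subnet_ids}"
}
```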

Overall, Terraform is a pleasure to work with, and that makes a huge difference in our daily lives. DevOps people like to enjoy their lives too. 🙌

Migration from CloudFormation to Terraform

It is possible to simply import the existing AWS infrastructure directly into Terraform, but there are certain downsides to it. We found that naming conventions are quite different and that would make it more challenging to maintain our environments in the future. Also, a simple import would not allow us to use the new Terraform features. For example, instead of hard-coding the identifiers of Amazon Machine Images used for deployment we started using aws_ami to find the most recent image dynamically:

aws_ami

    data "aws_ami" "bastion_ami" {
      most_recent = true

      filter {
        name   = "architecture"
        values = ["x86_64"]
      }

      filter {
        name   = "name"
        values = ["bastion-*"]
      }

      filter {
        name   = "virtualization-type"
        values = ["hvm"]
      }

      name_regex = "bastion-.*"
      owners     = [92135000000]
    }
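Once a data source like this exists, other resources can reference its `id` attribute instead of a hard-coded AMI identifier. Here is a minimal sketch, using a trimmed-down data source with a hypothetical name (`example`), the special owner value `self`, and a made-up `subnet_id` variable and instance type:

```hcl
# Hypothetical input: the subnet to launch the instance into.
variable "subnet_id" {}

# Trimmed-down lookup: the newest image in this account whose name
# starts with "bastion-".
data "aws_ami" "example" {
  most_recent = true
  owners      = ["self"]

  filter {
    name   = "name"
    values = ["bastion-*"]
  }
}

# The instance picks up whichever image the lookup returns at plan time.
resource "aws_instance" "bastion" {
  ami           = "${data.aws_ami.example.id}"
  instance_type = "t2.micro"
  subnet_id     = "${var.subnet_id}"
}
```

One consequence of this design is that publishing a newer image changes the next `terraform plan`, so the image update shows up as a proposed change rather than a manual edit.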

It took us a couple of weeks to write the code from scratch. After we had the same infrastructure described in Terraform, we recreated all non-production environments where downtime wasn’t an issue. This also allowed us to create a complete checklist of all the steps required to migrate the production environment.

Finally, on January 21, 2018, we completely recreated 1Password.com. We had to bring the service offline during the migration. Most of our customers were not affected by the downtime because the 1Password apps are designed to function even when the servers are down or when an Internet connection is not available. Unfortunately, our customers who needed to access the web interface during that time were unable to do so, and we apologize for the interruption. Most of the 2 hours and 39 minutes of downtime was spent on data migration. The 1Password.com database is just under 1 TB in size (not including documents and attachments), and it took almost two hours to complete the snapshot and restore operations.

We are excited to finally have all our development, test, staging, and production environments managed with Terraform. There are many new features and improvements we have planned for 1Password, and it will be fun to review new infrastructure pull requests on GitHub!

I remember when we were starting out we hosted our very first server with 1&1. It would have taken weeks to rebuild the very simple environment there. The world has come a long way since we first launched 1Passwd 13 years ago. I am looking forward to what the next 13 years will bring! 😀

Questions

A few questions and suggestions about the migration came up on Twitter:

By “recreating” you mean building out a whole new VPC with Terraform? Couldn’t you build it then switch existing DNS over for much less down time?

This is pretty much what we ended up doing. Most of the work was performed before the downtime. Then we updated the DNS records to point to the new VPC.
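As an illustration of that final step, a DNS record managed in Terraform can be pointed at the load balancer in the new VPC. This is only a sketch: the record name and all three variables are hypothetical, and the post does not say how the real records were updated.

```hcl
# Hypothetical inputs describing the hosted zone and the new load balancer.
variable "zone_id" {}
variable "new_lb_dns_name" {}
variable "new_lb_zone_id" {}

# An alias record that sends traffic to the load balancer in the new VPC.
resource "aws_route53_record" "site" {
  zone_id = "${var.zone_id}"
  name    = "app.example.com"
  type    = "A"

  alias {
    name                   = "${var.new_lb_dns_name}"
    zone_id                = "${var.new_lb_zone_id}"
    evaluate_target_health = true
  }
}
```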

Couldn’t you’ve imported all online resources? Just wondering.

That is certainly possible, and it would have allowed us to avoid downtime. Unfortunately, it also requires manual mapping of all existing resources. Because of that, it’s hard to test, and the chance of a human error is high – and we know humans are pretty bad at this. As a wise person on Twitter said: “If you can’t rebuild it, you can’t rebuild it”.

If you have any questions, let us know in the comments, or ask me (@roustem) and Tim (@stumyp), our Beardless Keeper of Keys and Grounds, on Twitter.

by Roustem Karimov on
