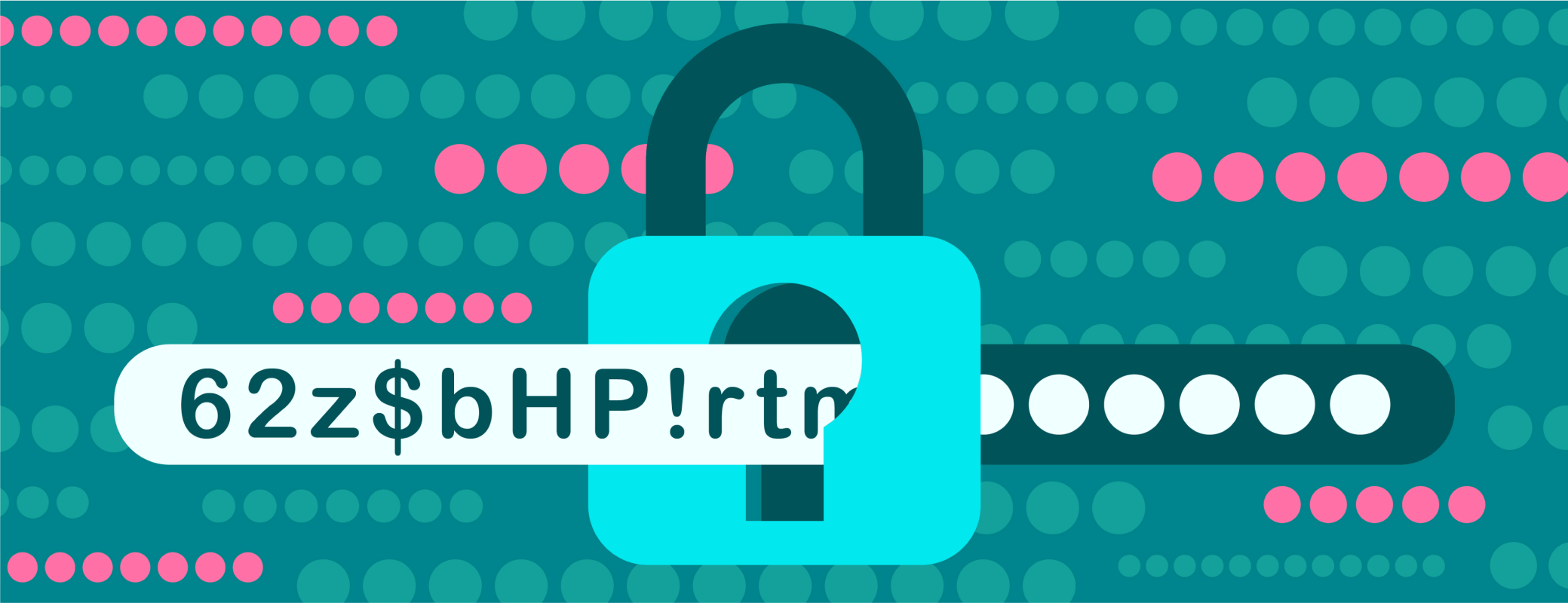

Less than six months ago, artificial intelligence (AI) was largely considered to be in its infancy and primarily used for niche applications, like editing photos and keeping your home at a comfortable temperature. But that’s all changed. Since OpenAI introduced ChatGPT, built on its GPT-3.5 models, in November 2022, the possibilities of generative AI have come to dominate the popular imagination.

And with good reason: GPT-4 not only scores in roughly the top 10% of test takers on a simulated bar exam, it also ranks highly on dozens of specialized tests ranging from economics to writing. Over the last few weeks, you’ve probably seen convincing images of famous people, heard catchy songs by popular artists, and read articles, all completely generated by AI models.

Excited by the untapped potential, many developers are jumping in and building new apps that integrate with OpenAI. Unfortunately, in their enthusiasm to create and share, many of these developers are accidentally giving attackers the opportunity to rack up thousands of dollars in charges on their credit cards.

The good news? These kinds of attacks are completely preventable. If you’re interested in building with AI, but want to avoid this problem, keep reading.

How are attackers pirating OpenAI accounts?

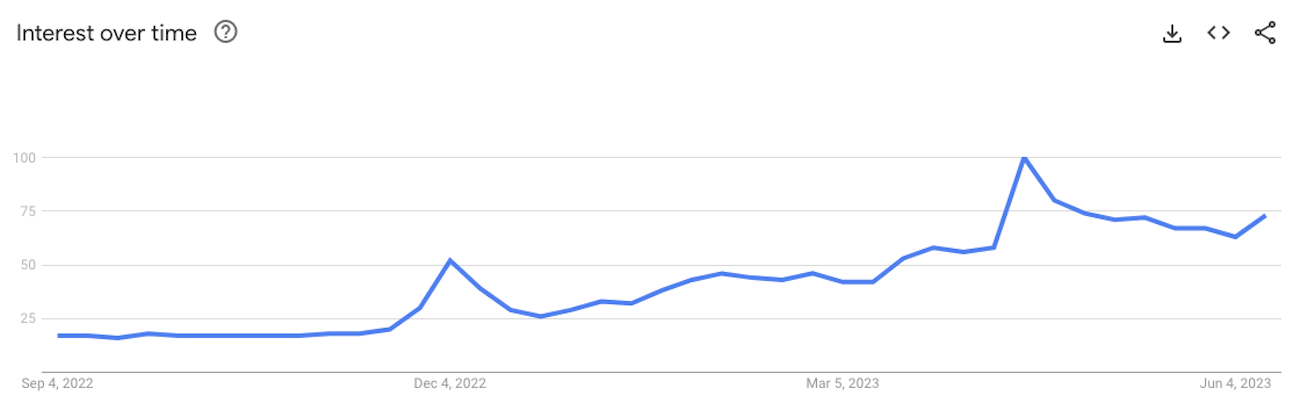

OpenAI offers an application programming interface (API) that enables developers to leverage GPT-4 and other models in their own projects. To use the API, developers need to add a credit card to their accounts so they can be billed based on how much they use the system.

Developers can then generate and use API keys to connect the projects they are building to their OpenAI accounts. Each key is essentially a credential like a password. This is a standard way to integrate third-party services into an application.

But here’s the problem: many developers are referencing their OpenAI API keys directly in their code. That means their keys are exposed whenever they share their projects, which is very common in the budding AI community.

Attackers constantly scan public repositories for unprotected keys, and when they find one, they can easily use it in their own unauthorized projects. Using a stolen API key means the attacker pays nothing. OpenAI has no way of knowing the key was stolen, so it bills the key’s owner (the developer) for the additional API usage.
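The same kind of scan can be turned around as a quick self-check before you share a project. The sketch below is a minimal illustration, not a complete secret scanner: it assumes OpenAI keys start with `sk-` followed by a long run of letters, digits, underscores, or hyphens, and the function name `find_exposed_keys` is hypothetical.

```python
import re

# Assumption: OpenAI secret keys start with "sk-" followed by at least 20
# letters, digits, underscores, or hyphens. Real key formats may differ.
KEY_PATTERN = re.compile(r"\bsk-[A-Za-z0-9_-]{20,}")


def find_exposed_keys(source: str) -> list[tuple[int, str]]:
    """Return (line_number, redacted_key) for every key-like string found."""
    findings = []
    for line_number, line in enumerate(source.splitlines(), start=1):
        for match in KEY_PATTERN.finditer(line):
            # Report only a short prefix so the scan itself never prints a secret.
            findings.append((line_number, match.group(0)[:6] + "..."))
    return findings
```

Running a check like this over your files before pushing to a public repository catches the most obvious mistake, a key pasted directly into code. It will not catch every format, so treat an empty result as a hint rather than a guarantee.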

The end result is potentially devastating charges for the actual account owners.

Why does it matter?

Leaking developer secrets like API keys in code isn’t a new problem. It’s a growing issue that has led to significant breaches and financial losses for major brands and individual developers alike. Whether you’re working with OpenAI or a different service, protecting your API keys should be a top priority.

Securing workflows is critical not only for developers and enterprise businesses, but also for consumers and technology enthusiasts. We’ve already seen a wave of people who aren’t traditional developers flock to OpenAI and create interesting projects. That number is only going to grow as organizations like OpenAI find ways to make AI even more accessible to users.

If you’re experimenting with AI services, it’s important that you equip yourself with tools like 1Password that allow you to secure your API keys and other sensitive information.

How you can protect developer secrets with 1Password

Hardcoding secrets like API keys into your projects is never a good idea. It’s how most secrets are leaked. To avoid this, many developers store secrets in environment variables or in separate configuration files that are kept out of version control, and only reference those secrets in code. This is a safer approach but can lead to ‘secrets sprawl’, where it’s hard to track down where different secrets are stored. The problem only gets worse when you’re moving a project through different environments or collaborating with a team.
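As a rough sketch of the environment variable approach, the code below reads the key at runtime instead of embedding it in the source. The variable name `OPENAI_API_KEY` is the conventional one, and the helper `load_api_key` is a hypothetical name used only for illustration.

```python
import os


def load_api_key(env=os.environ, name="OPENAI_API_KEY"):
    """Read the API key from the environment instead of from source code."""
    key = env.get(name, "").strip()
    if not key:
        # Fail loudly so a missing key is noticed before any request is made.
        raise RuntimeError(f"{name} is not set; export it before running the app")
    return key
```

The code that gets committed now contains only the name of the variable, never its value. The value still has to live somewhere on each machine, which is where the sprawl described above comes from.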

1Password offers a better way for you to manage your secrets. Take a look at this practical demo of how you can use 1Password Service Accounts and Command-line Interface (CLI) to protect your OpenAI API keys:

This demo shows a good example of how you can secure your API keys and other development secrets in encrypted 1Password vaults. Using the 1Password CLI, you can then replace hardcoded secrets in your code with references that point to where the keys are stored in 1Password. At runtime, these references securely and automatically switch out for the actual API key values.
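To make the idea of secret references more concrete, here is a minimal sketch of resolving them from Python. It assumes the 1Password CLI (`op`) is installed and signed in, and that references follow the `op://vault/item/field` form. The vault, item, and field names (`dev`, `openai`, `api-key`) and the helper names are hypothetical, and this is not necessarily how the demo itself is built.

```python
import subprocess


def read_with_op(reference: str) -> str:
    """Resolve one secret reference by shelling out to `op read`."""
    result = subprocess.run(
        ["op", "read", reference],
        capture_output=True,
        text=True,
        check=True,  # raise if the CLI reports an error
    )
    return result.stdout.strip()


def resolve_config(config: dict, reader=read_with_op) -> dict:
    """Swap any value that looks like an op:// reference for the real secret."""
    return {
        name: reader(value) if value.startswith("op://") else value
        for name, value in config.items()
    }


# Safe to commit: this holds a pointer to the secret, not the secret itself.
CONFIG = {
    "OPENAI_API_KEY": "op://dev/openai/api-key",  # hypothetical reference
    "MODEL": "gpt-4",
}
```

Calling `resolve_config(CONFIG)` at startup would fetch the key only when the app runs. The CLI also provides `op run`, which can load references from environment variables for a whole command, so you may not need a helper like this at all.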

1Password Service Accounts give you even more control by enabling you to limit your app’s access to specific vaults in 1Password and control the actions your apps can perform. Keeping all of your secrets in a single source of truth that syncs across devices makes it easier to manage them throughout the software development lifecycle, rotate them when needed, and, if you’re working for an organization, securely share them with other members of your team.

Let’s go back to the OpenAI scam that attackers have been running recently. Thanks to 1Password, your code no longer contains any secrets directly, including your OpenAI API key. Instead, it just includes references to where those secrets are stored in 1Password. So when you share the project on a public repository, an attacker can’t look through your code and steal the key.

So, what’s next?

We can say with confidence that artificial intelligence is here to stay. It’s likely to have a major impact across industries and many aspects of our daily lives over the next few years, so we need to learn to protect ourselves as we build with this new technology.

If you’re one of the people exploring AI tools, or thinking of joining the expanding AI community, it’s critical that you take steps to secure your sensitive information. With 1Password it’s much easier to keep attackers from exploiting your credit cards and other secrets.

by Micah Neidhart on
