At this point, it’s almost cliché to say “AI is here, and it is changing everything.” Whether it’s accelerating productivity or reshaping employee workflows, AI is ushering in a new era of operational possibilities. But as we all know, beneath this transformation lies a complex and evolving security challenge.

As AI introduces new risks, we wanted to understand how security leaders are approaching these challenges and where tangible solutions are most needed. To find out, we commissioned a survey of 200 North American security leaders. It revealed a core tension between the spread of AI and the lack of meaningful security controls.

Dave Lewis, Global Advisory CISO at 1Password, has been speaking with security leaders around the world and has found a shared concern about the deluge of AI tools entering their environments. As Lewis put it, “My favourite quote was from a CISO in the EU who said to me, ‘We have closed the door to AI tools and projects, but they keep coming through the window!’ Governance over AI is severely lacking in many corporate environments.”

The survey highlights four critical challenges and what security leaders should consider to secure today’s AI-augmented workforce.

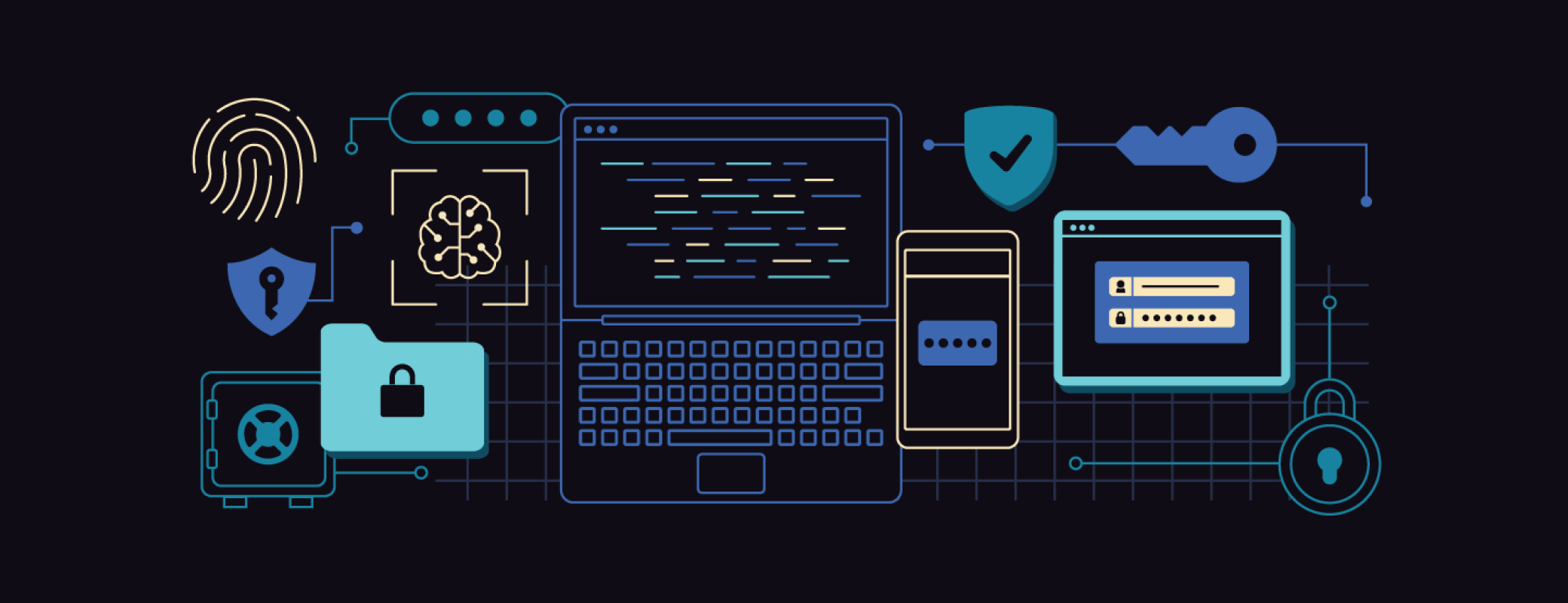

Challenge 1: Limited visibility into AI tool usage

Only 21% of security leaders say they have full visibility into AI tools used in their organization.

Security teams have been tackling limited visibility into applications and their usage for years. Employee adoption of AI tools, particularly generative AI tools, makes the problem worse. Even when policies are in place, not knowing which AI apps are in use makes those policies extremely difficult to enforce. And these policies can be the difference between your corporate data being used to train public LLMs and keeping control of it.

Employees’ adoption of unsanctioned AI tools is a major contributor to the Access-Trust Gap: the risk created when unmanaged devices, unfederated identities, and unsanctioned apps, including AI tools, access company data without proper governance. AI is widening this gap and making it harder for security leaders to keep their organizations secure. So, how do you estimate and reduce a risk you can’t see?

To mitigate the visibility challenges introduced by AI, security leaders should take the following actions:

- Document how employees use AI in their daily workflows today and how they plan to use it.

- Use SaaS governance or device trust tools to help identify AI usage.

- Evaluate where new or additional policies need to be enforced, such as blocking specific generative AI tools.
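As a rough illustration of the discovery step, the Python sketch below scans exported web proxy or DNS logs for traffic to known generative AI domains and separates sanctioned from unsanctioned tools. Everything specific in it is an assumption for illustration: the CSV layout (`user` and `domain` columns), the small domain sample, and the `SANCTIONED` allowlist. In practice, a SaaS governance or device trust tool would supply a far more complete inventory.

```python
# Sketch: surface AI tool usage from exported proxy or DNS logs.
# Assumptions (hypothetical, for illustration only): logs are CSV with
# `user` and `domain` columns, AI_DOMAINS is a small non-exhaustive sample,
# and SANCTIONED is your organization's approved-tool list.
from __future__ import annotations

import csv
import io
from collections import defaultdict

# Illustrative sample of generative AI domains, not a maintained catalog.
AI_DOMAINS = {
    "chatgpt.com": "ChatGPT",
    "chat.openai.com": "ChatGPT",
    "claude.ai": "Claude",
    "gemini.google.com": "Gemini",
    "copilot.microsoft.com": "Copilot",
}

# Hypothetical allowlist of AI tools approved by policy.
SANCTIONED = {"Copilot"}


def match_ai_tool(domain: str) -> str | None:
    """Return the AI tool for a domain or any of its parent domains, else None."""
    labels = domain.lower().strip(".").split(".")
    for i in range(len(labels) - 1):
        candidate = ".".join(labels[i:])
        if candidate in AI_DOMAINS:
            return AI_DOMAINS[candidate]
    return None


def summarize_ai_usage(log_csv: str) -> dict[str, dict[str, set[str]]]:
    """Map each AI tool seen in the logs to its users, split by sanctioned status."""
    report: dict[str, dict[str, set[str]]] = {
        "sanctioned": defaultdict(set),
        "unsanctioned": defaultdict(set),
    }
    for row in csv.DictReader(io.StringIO(log_csv)):
        tool = match_ai_tool(row["domain"])
        if tool is None:
            continue  # not an AI tool in our sample list
        status = "sanctioned" if tool in SANCTIONED else "unsanctioned"
        report[status][tool].add(row["user"])
    return report
```

Given a log in which one user visits `chatgpt.com` and another visits `copilot.microsoft.com`, the report lists the first under unsanctioned ChatGPT use and the second under sanctioned Copilot use. Matching parent domains means subdomains such as `api.claude.ai` are caught as well, and the unsanctioned bucket is a natural starting point for deciding where new policies or blocks are needed.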

Challenge 2: AI and security policy enforcement

54% of security leaders say their AI governance enforcement is weak.

32% believe up to half of employees continue to use unauthorized AI applications.

Implementing and enforcing security policies has long been a cybersecurity challenge. When it comes to AI, governance is a priority. Policies have been written. Frameworks are being drafted. Yet in practice, AI tool usage often remains uncontrolled.

A core issue is that AI adoption is outpacing our ability to secure it, and even the best security policy is ineffective if it can’t be enforced. Organizational leaders need to discover which AI tools are in use, then decide how strictly to enforce their policies and in what form: monitoring, blocking, or personnel action. This is what enables the business to embrace AI securely and responsibly.

To establish effective AI governance, security leaders should take the following steps:

- Embed security policy enforcement into your organization’s overall AI adoption strategy.

- Collaborate with business partners to proactively identify and assess the use of AI, discussing the business risk with stakeholders such as the legal department.

- Evaluate and adopt tools that enable you to monitor and block unauthorized AI use.

Challenge 3: Unintentional exposure via AI access

63% of security leaders believe the biggest internal security threat is that their employees have unknowingly given AI access to sensitive data.

Employees are increasingly adopting AI tools and feeding them corporate data, often with minimal oversight. Because these tools depend on that data to be useful, the lack of oversight puts organizations at significant risk of losing control of it.

The issue isn’t malicious intent. Employees may unknowingly give AI tools access to sensitive data, creating a range of risks and potential compliance violations. This is especially true when the data goes into AI tools that use it to train their public LLMs by default.

Mitigating the risk of unintentional data exposure requires an organization-wide framework for secure data usage in AI environments. Security leaders should operationalize the following:

- Define what constitutes acceptable data sharing with AI tools. Unless you are absolutely sure that an AI tool keeps all data within your own infrastructure, assume that sharing data with it is the same as posting that data on social media.

- Deploy and track training programs that help employees recognize the risks of uploading corporate data to AI tools.

- Design user-friendly guardrails that will protect corporate data without slowing down work.

Challenge 4: Unmanaged AI

More than half of security leaders (56%) estimate that between 26% and 50% of their AI tools and agents are unmanaged.

As employees adopt AI, many apps and AI agents may be interacting with business systems without going through formal identity or access governance. That means employees may be giving AI agents their own credentials, hard-coding credentials during development, or even connecting AI tools directly to sensitive systems.

Traditional identity and access management models were not designed with this in mind. The result is a risk and compliance nightmare: without proper governance, it becomes extremely difficult to understand how access is being used or to maintain the audit trails that compliance requires.

To mitigate risks introduced by unmanaged AI tools and agents, organizations must evolve their access governance strategies. Security leaders should take the following actions:

- Extend access management and governance to include AI agents.

- Create clear guidelines for how AI agents and tools are provisioned access and how that access is managed, including how it is tracked, recertified, and revoked.

- Ensure that you have the ability to audit the actions of AI tools and agents in order to meet compliance requirements.
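The lifecycle described in these steps (provisioned, tracked, recertified, revoked, and auditable) can be sketched as a small registry. This is a minimal Python sketch under stated assumptions: the class names, scope strings, and 30-day default lifetime are all hypothetical, and a real deployment would build on your existing identity and access management platform rather than an in-memory object.

```python
# Sketch: govern AI agent access with the same lifecycle as human identities.
# Every name here (AgentGrant, AgentAccessRegistry, scope strings, the 30-day
# default) is hypothetical; this illustrates the lifecycle, not a product API.
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import datetime, timedelta


@dataclass
class AgentGrant:
    agent_id: str
    owner: str               # human accountable for the agent
    scopes: frozenset[str]   # least-privilege permissions
    expires_at: datetime     # forces periodic recertification
    revoked: bool = False


@dataclass
class AgentAccessRegistry:
    grants: dict[str, AgentGrant] = field(default_factory=dict)
    # Append-only trail of (timestamp, agent_id, action, detail) for audits.
    audit_log: list[tuple[datetime, str, str, str]] = field(default_factory=list)

    def _record(self, now: datetime, agent_id: str, action: str, detail: str) -> None:
        self.audit_log.append((now, agent_id, action, detail))

    def provision(self, agent_id: str, owner: str, scopes: set[str],
                  now: datetime, ttl: timedelta = timedelta(days=30)) -> AgentGrant:
        grant = AgentGrant(agent_id, owner, frozenset(scopes), now + ttl)
        self.grants[agent_id] = grant
        self._record(now, agent_id, "provision", f"owner={owner} scopes={sorted(scopes)}")
        return grant

    def is_allowed(self, agent_id: str, scope: str, now: datetime) -> bool:
        grant = self.grants.get(agent_id)
        allowed = (
            grant is not None
            and not grant.revoked
            and now < grant.expires_at
            and scope in grant.scopes
        )
        # Log every check, allowed or denied, so agent actions stay auditable.
        self._record(now, agent_id, "check", f"scope={scope} allowed={allowed}")
        return allowed

    def recertify(self, agent_id: str, now: datetime,
                  ttl: timedelta = timedelta(days=30)) -> None:
        grant = self.grants[agent_id]
        if grant.revoked:
            raise ValueError(f"{agent_id} is revoked; provision a new grant instead")
        grant.expires_at = now + ttl
        self._record(now, agent_id, "recertify", f"expires_at={grant.expires_at.isoformat()}")

    def revoke(self, agent_id: str, now: datetime, reason: str) -> None:
        self.grants[agent_id].revoked = True
        self._record(now, agent_id, "revoke", reason)
```

Because grants expire on their own, an agent whose owner never recertifies it loses access by default, and every allow or deny decision leaves a timestamped record for compliance reviews.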

Securing AI: The path forward

This research makes one thing clear: security leaders are aware of the risks posed by AI, but they are under-equipped to address them. As AI adoption accelerates, the absence of visibility, governance, and control over AI tools and agents leaves organizations exposed.

The good news? There’s a path forward. Securing AI doesn’t mean slowing it down; it means enabling it with confidence. At 1Password, we believe the future of work depends on extending trust-based security to every identity, human or machine. It’s time to stop playing catch-up and start building security strategies that keep pace with AI.

Learn more about how 1Password can help you secure the use of AI and AI agents, so employees get the productivity benefits with minimal risk.

by 1Password on