You may have heard about contact tracing apps, which are designed to help health authorities identify people who have been in contact with someone infected with SARS-CoV-2, the novel coronavirus which causes COVID-19. It’s natural to worry that such apps could be used to collect data about who you meet and where you go.

Fortunately, there is some clever technology that leaves the user in control and protects privacy, while giving individuals and health authorities the information they need. Apple and Google are introducing that technology to their phones.

I fully anticipate that I’ll enable the relevant app that uses this exposure notification technology when it becomes available, and that I’ll encourage others to, as well.

Privacy still matters

It’s reasonable to ask whether privacy preservation still matters when there is a pressing and compelling public health need for improved contact tracing. As we face the choice of whether to adopt privacy-preserving tracing apps, privacy-violating apps, or no apps at all, I’ll say a few words about why we should choose those that preserve privacy.

The data that these apps might gather could include where you travel, who you come into contact with, and some of your health information. That data, on perhaps millions of people, will have to be stored somewhere. Even well-intentioned holders of such data would bear an enormous burden to protect it, and to ensure that it’s only used for its intended purposes and in ways that don’t reveal anything more than it should. We can’t rely on all holders of such data to be well intentioned.

So I’m delighted that Google and Apple are providing these tools. They enable health authorities to distribute apps without putting themselves in a position to have to defend such a rich trove of data. As you will see below, the scheme is set up so contact tracing secrets don’t need to be stored in any central location.

How it works

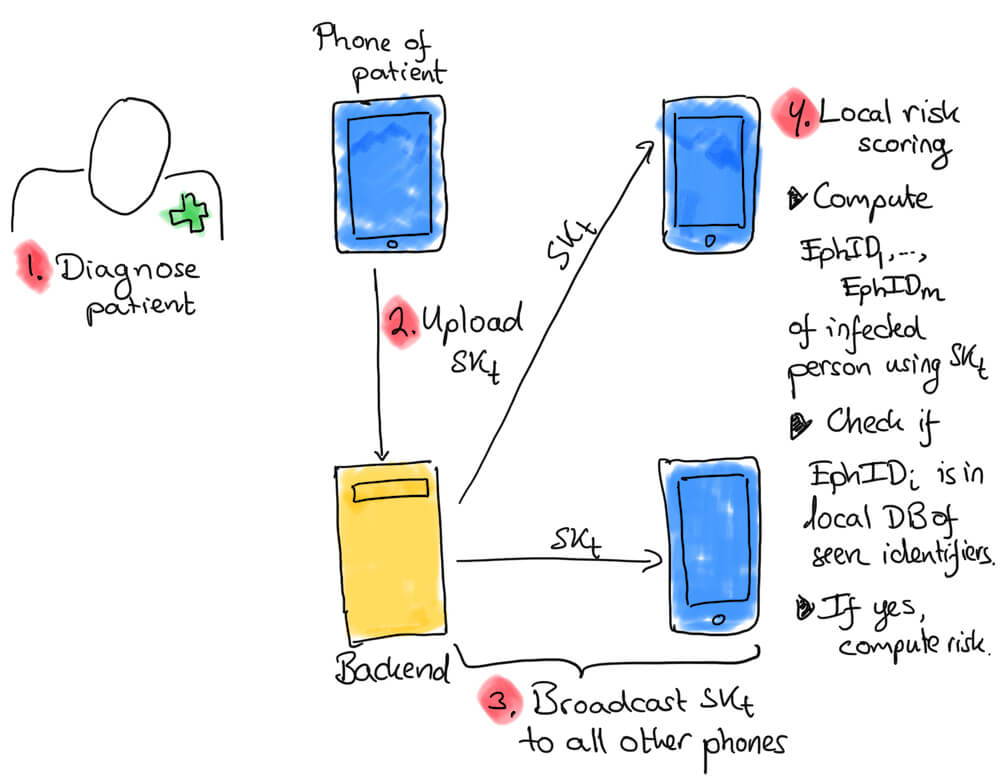

I’ve been using the term “contact tracing” a bit loosely. The technology that Apple and Google are rolling out is more properly called exposure notification. It is based on an older version of Decentralized Privacy-Preserving Proximity Tracing (DP-3T), and would work something like this. (Because the diagrams come from DP-3T’s documentation, I’ll use their terminology.)

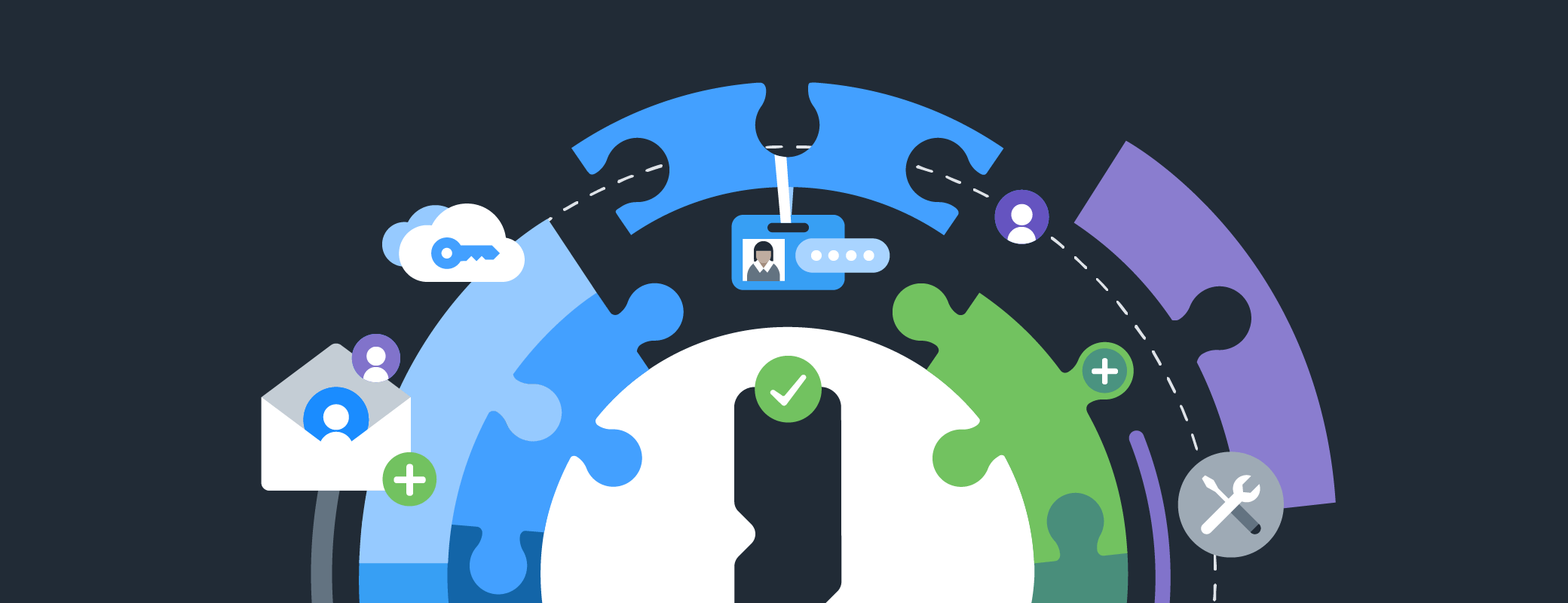

Patty and Molly (who may not know each other) both install and enable the app from their health authority.

As Patty and Molly go about their business, their phones send and receive Ephemeral Identifiers (EphIDs) to and from other enrolled phones nearby. Each phone changes the EphID it broadcasts every 10 minutes.

On Saturday afternoon, they come within Bluetooth distance of each other (a few meters).

Now Patty and Molly (and others) are running around with lots of these Ephemeral IDs, each stored along with the day it was received. The IDs are designed to be small, and are purged after 14 days, so even if you are in Bluetooth range of a hundred other devices every 10 minutes, only a few megabytes of data need to be stored.
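The “few megabytes” figure can be checked with some quick arithmetic. The sketch below assumes each EphID is 16 bytes (the size used in DP-3T’s design); the other numbers come straight from the scenario above. It counts only the IDs themselves, not the day or signal-strength data stored with them.

```python
# Back-of-the-envelope storage estimate for received Ephemeral IDs.
EPHID_BYTES = 16                    # assumption: 16-byte EphIDs
DEVICES_IN_RANGE = 100              # other phones nearby in every interval
INTERVALS_PER_DAY = 24 * 60 // 10   # EphIDs change every 10 minutes -> 144
RETENTION_DAYS = 14                 # older IDs are purged

stored_ids = DEVICES_IN_RANGE * INTERVALS_PER_DAY * RETENTION_DAYS
total_bytes = stored_ids * EPHID_BYTES

print(f"{stored_ids:,} EphIDs, about {total_bytes / 1_000_000:.1f} MB")
```

Even in this deliberately crowded scenario, the IDs come to roughly 3.2 MB.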

The Ephemeral IDs are actually generated using each user’s day key. Molly will create a new secret day key each day, as will Patty and every other user. There is no way to figure out the day key from the EphIDs. In the diagrams, the day key is labelled “SKt”.
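To make the one-way relationship between a day key and its EphIDs concrete, here is a minimal sketch. It is not the actual derivation used by DP-3T or by Apple and Google; it uses HMAC-SHA256 as a stand-in keyed function, and the names `new_day_key` and `derive_ephids`, the `b"ephid"` label, and the 16-byte EphID size are all assumptions made for illustration.

```python
import hashlib
import hmac
import secrets
from typing import List

EPHIDS_PER_DAY = 24 * 60 // 10  # one EphID per 10-minute interval
EPHID_BYTES = 16                # assumed EphID size


def new_day_key() -> bytes:
    """Create a fresh random secret day key (hypothetical helper)."""
    return secrets.token_bytes(32)


def derive_ephids(day_key: bytes) -> List[bytes]:
    """Derive the day's EphIDs from the day key with a keyed hash.

    HMAC-SHA256 is a stand-in here: anyone holding the day key can
    recompute the same EphIDs, but the EphIDs alone do not reveal the key.
    """
    return [
        hmac.new(day_key, b"ephid" + i.to_bytes(2, "big"), hashlib.sha256)
        .digest()[:EPHID_BYTES]
        for i in range(EPHIDS_PER_DAY)
    ]


day_key = new_day_key()
ephids = derive_ephids(day_key)
print(len(ephids), "EphIDs of", len(ephids[0]), "bytes each")
```

The important property is that the derivation is deterministic for whoever holds the day key, and infeasible to reverse for everyone else.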

Patty gets sick

If nobody gets sick, none of the information needs to go anywhere. But if Patty is diagnosed with COVID-19 the following Wednesday, she will be asked to share her day keys for previous days (at most 14 days). Note that until this point the day keys have never been shared with other devices, and there is nothing sensitive about them once they are shared: they tell nothing about Patty’s movements or activities, much less actually identify her.

The health authority maintains and publishes a database of day keys for people like Patty. In Patty’s case, it will include the day keys (along with the dates) for the period they believe she could have been infectious.

The backend server will periodically send these day keys (and the days they correspond to) to all the phones, so Molly’s phone will get Patty’s day key from the previous Saturday. Molly’s app will then use Patty’s day key to perform a cryptographic verification check on the Ephemeral IDs she has for that day.
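The check on Molly’s phone can be sketched as follows: re-derive the EphIDs from each published day key and look for any that Molly’s phone recorded on the same day. This is an illustration under assumptions, not the real protocol code. `derive_ephids` is a stand-in derivation built on HMAC-SHA256, and `find_matches` and the dictionary layouts are hypothetical.

```python
import hashlib
import hmac
import secrets
from typing import Dict, List, Set, Tuple


def derive_ephids(day_key: bytes, count: int = 144) -> List[bytes]:
    """Stand-in derivation of one day's EphIDs from a day key (assumption)."""
    return [
        hmac.new(day_key, b"ephid" + i.to_bytes(2, "big"), hashlib.sha256)
        .digest()[:16]
        for i in range(count)
    ]


def find_matches(
    published: Dict[str, List[bytes]],   # day -> day keys from the server
    observed: Dict[str, Set[bytes]],     # day -> EphIDs this phone received
) -> List[Tuple[str, int]]:
    """Return (day, number of matching EphIDs) for each published key that matches."""
    matches = []
    for day, day_keys in published.items():
        seen = observed.get(day, set())
        for day_key in day_keys:
            hits = seen.intersection(derive_ephids(day_key))
            if hits:
                matches.append((day, len(hits)))
    return matches


# Patty's Saturday key, and what Molly's phone happened to record that day.
patty_saturday_key = secrets.token_bytes(32)
patty_ephids = derive_ephids(patty_saturday_key)
molly_observed = {"saturday": set(patty_ephids[80:83]) | {secrets.token_bytes(16)}}

published = {
    "saturday": [patty_saturday_key],
    "sunday": [secrets.token_bytes(32)],  # an unrelated person's key
}
print(find_matches(published, molly_observed))
```

Here Molly’s phone finds three matching EphIDs for Saturday. All of the matching happens on her phone, using only data she already holds plus the published day keys.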

If Molly’s phone finds a match for an EphID, it still needs to figure out whether she was around Patty long enough and close enough for this to be worrisome. In the Apple/Google scheme, Molly’s phone will send the day of the contact, the duration of the contact, and the strength of the Bluetooth signal back to the server. The server will use a tuned algorithm to compute a risk score. If the health authority believes Patty was very infectious, or Molly and Patty were close to each other for a long period, the server will tell Molly’s phone that this was a possible exposure. Molly’s app will then notify Molly and tell her who to contact and what to do next.

The Apple/Google scheme differs significantly from the latest version of DP-3T at this juncture. With the DP-3T scheme, the risk score is computed on Molly’s phone, and the backend server updates the various phones on how to compute the risk score.

Understanding privacy

My fear is that people won’t believe this system is privacy preserving. They will hear that this is a Google/Apple scheme and will incorrectly assume that a great deal of data is given to those companies when that’s not the case. People are also likely to falsely assume that health authorities using this can track everyone’s movements. After all, without the cleverness of the key and ID generation, along with a way to verify an Ephemeral ID when a day key is published, it would seem impossible to have something that works without having to collect a great deal of information about people’s movements.

Another difficulty for public understanding is that most people will not be able to tell which apps use good privacy-preserving mechanisms and which ones don’t. I hope, however, that this article will help you understand that these sorts of things can be built with strong privacy protections.

by Jeffrey Goldberg on
